Chaos on GKE Autopilot

This guide explains how to set up and run chaos engineering experiments using Harness Chaos Engineering on Google Kubernetes Engine (GKE) Autopilot clusters.

Overview

GKE Autopilot is Google's fully managed Kubernetes service that provides a hands-off experience while maintaining security and compliance. However, Autopilot has specific restrictions compared to standard GKE clusters, including limited permissions and no direct access to nodes.

For additional information about running privileged workloads on GKE Autopilot, see Google Partner Docs and Run privileged workloads from GKE Autopilot partners.

Prerequisites

Before you begin, ensure you have:

- A running GKE Autopilot cluster

kubectlaccess to the cluster with appropriate permissions- A Harness account with Chaos Engineering module enabled

- Cluster admin permissions to create allowlist synchronizers

Step-by-Step Setup Guide

Step 1: Configure GKE Autopilot Allowlist

GKE Autopilot requires an allowlist that defines exemptions from security restrictions for specific workloads. Harness maintains an allowlist for chaos engineering operations that you need to apply to your cluster.

Required permissions: You need cluster admin permissions and kubectl access to apply the allowlist synchronizer.

Apply the allowlist synchronizer to your GKE Autopilot cluster:

kubectl apply -f - <<'EOF'

apiVersion: auto.gke.io/v1

kind: AllowlistSynchronizer

metadata:

name: harness-chaos-allowlist-synchronizer

spec:

allowlistPaths:

- Harness/allowlists/chaos/v1.62/*

- Harness/allowlists/service-discovery/v0.42/*

EOF

Wait for the allowlist synchronizer to be ready:

kubectl wait --for=condition=Ready allowlistsynchronizer/harness-chaos-allowlist-synchronizer --timeout=60s

The allowlist paths include version numbers (e.g., v1.62, v0.42) that may change with Harness updates. If you encounter issues:

- Check the Harness release notes for the latest supported versions

- Update the allowlist paths accordingly

- Contact Harness support for the most current allowlist versions

Step 2: Enable GKE Autopilot Compatibility

After applying the allowlist synchronizer, you need to enable GKE Autopilot compatibility in your existing Harness infrastructure:

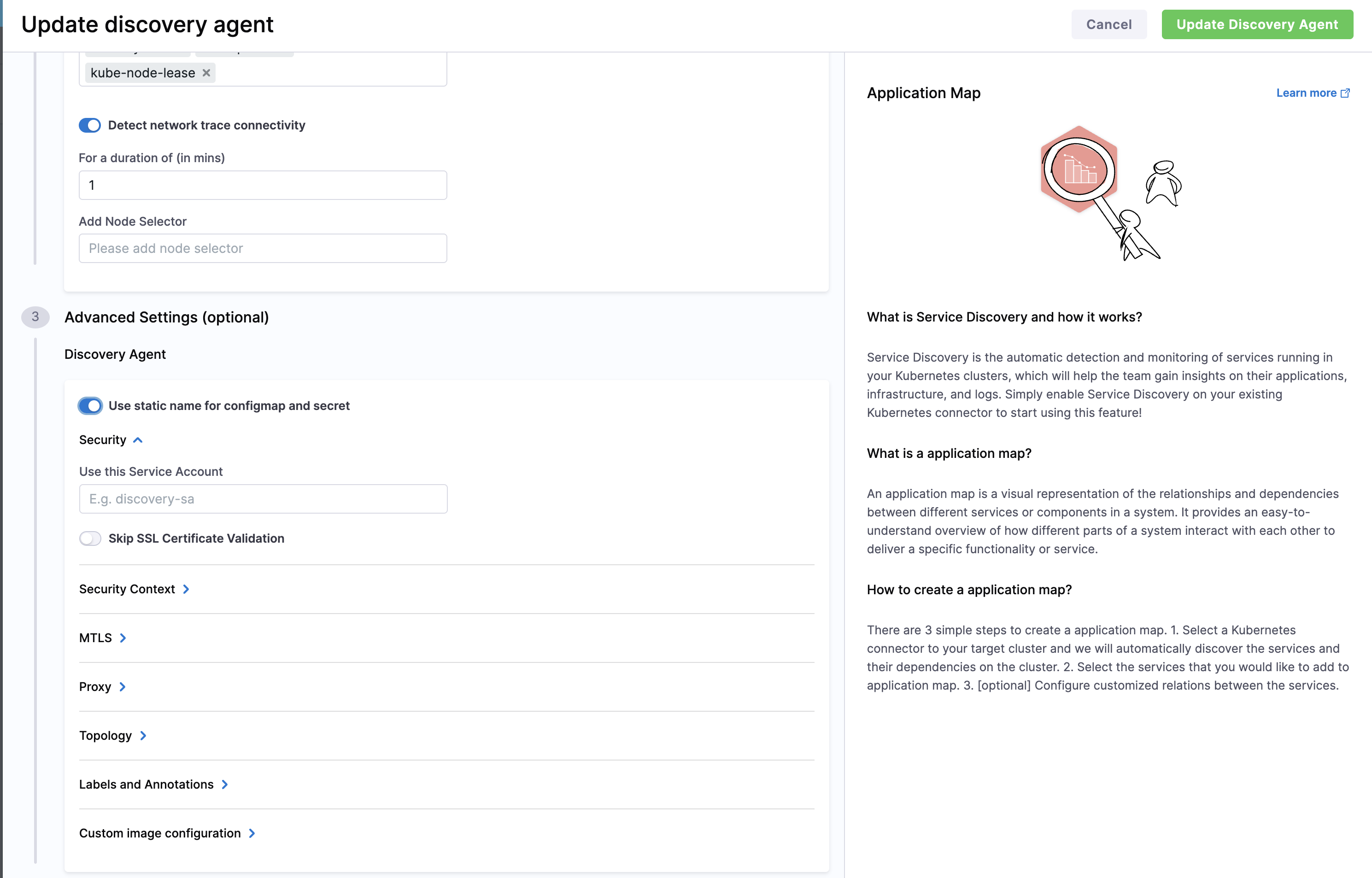

You can also configure the "Use static name for configmap and secret" option for GKE Autopilot compatibility during:

- 1-click chaos setup

- New discovery agent creation

- For existing discovery agents

Configure Infrastructure for GKE Autopilot

-

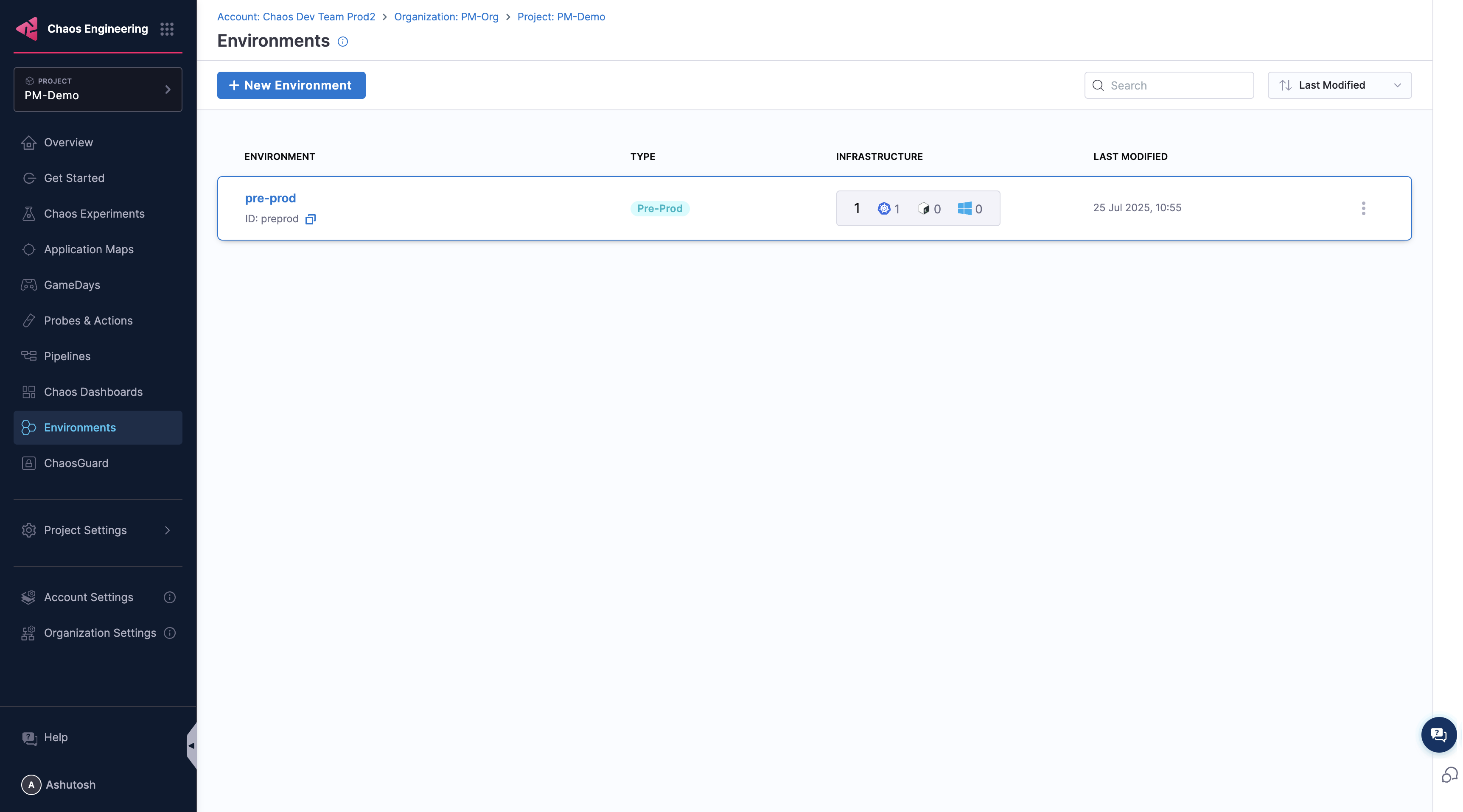

Navigate to Chaos Engineering → Environments and select your environment.

-

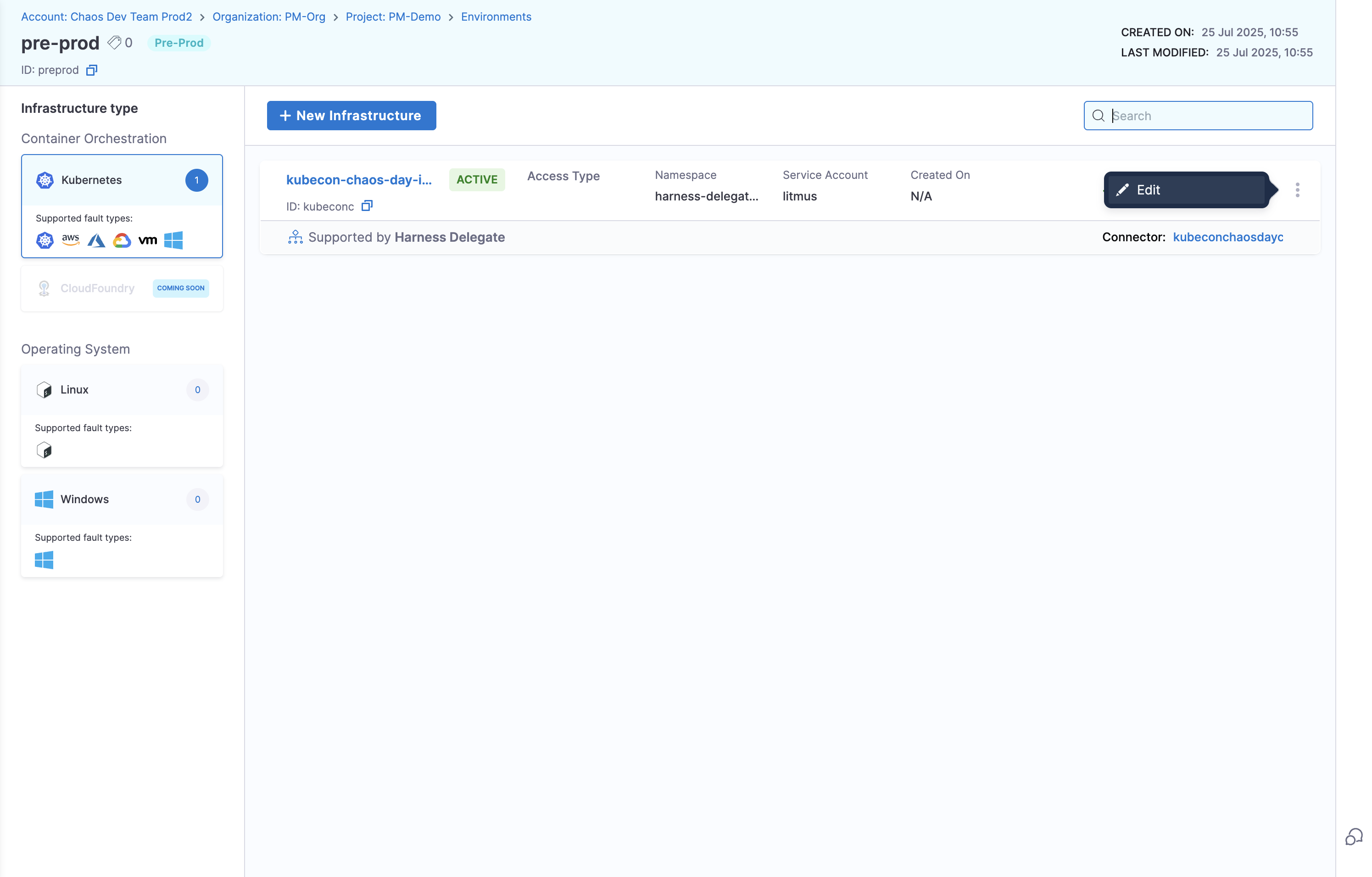

Click the options menu (⋮) next to your infrastructure and select Edit Infrastructure

-

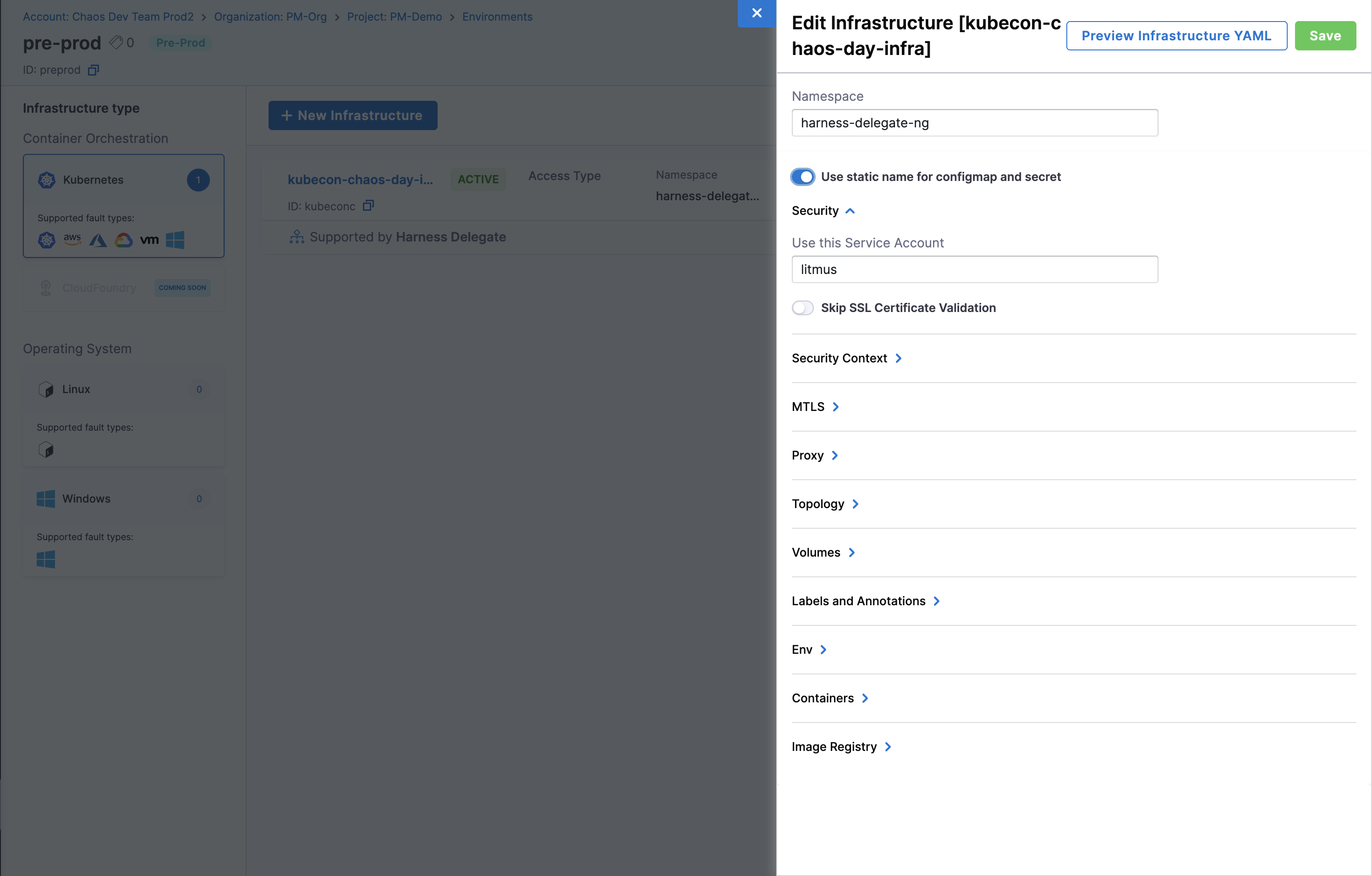

Toggle on "Use static name for configmap and secret" and click Save

Configure Service Discovery

-

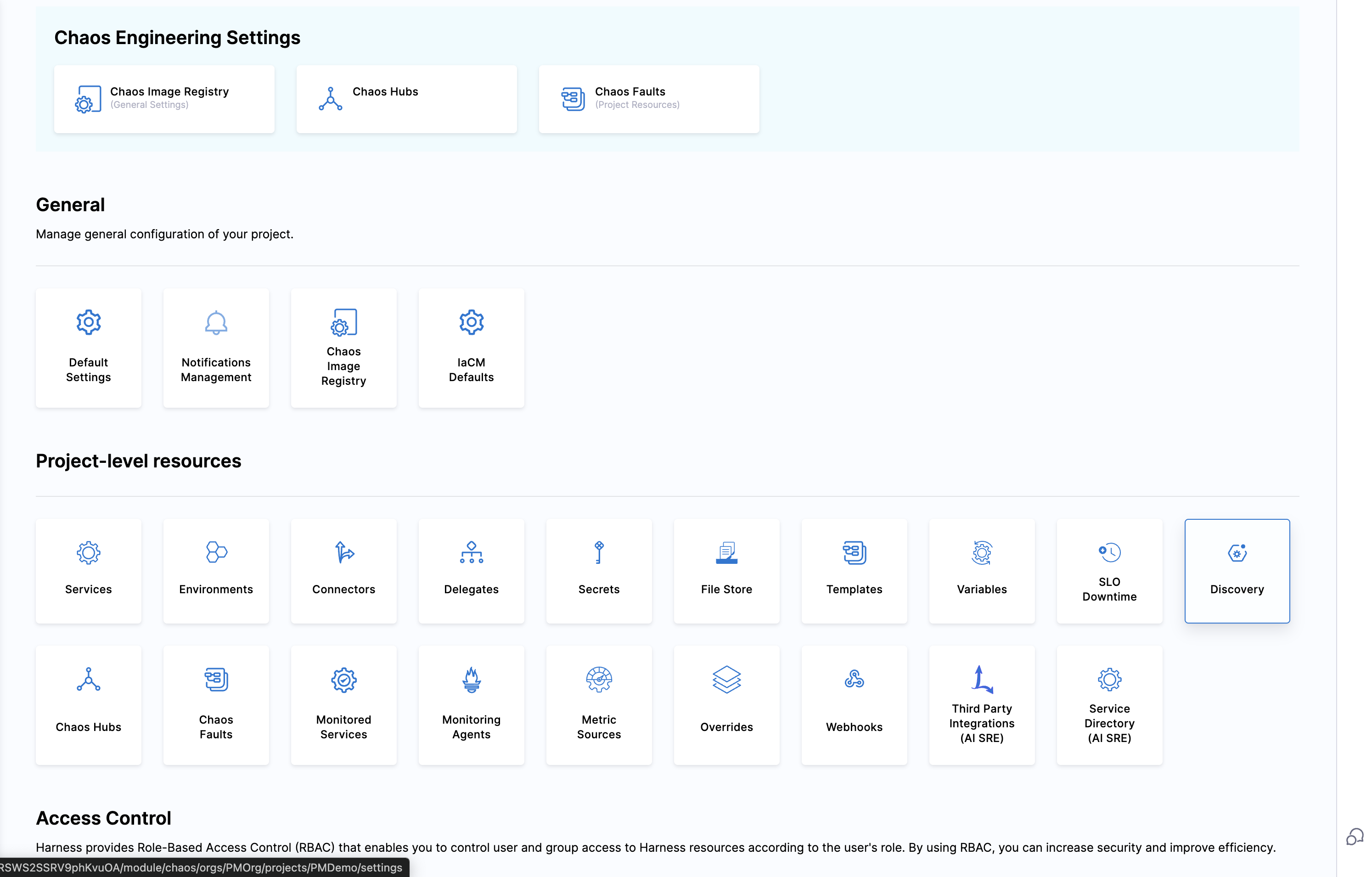

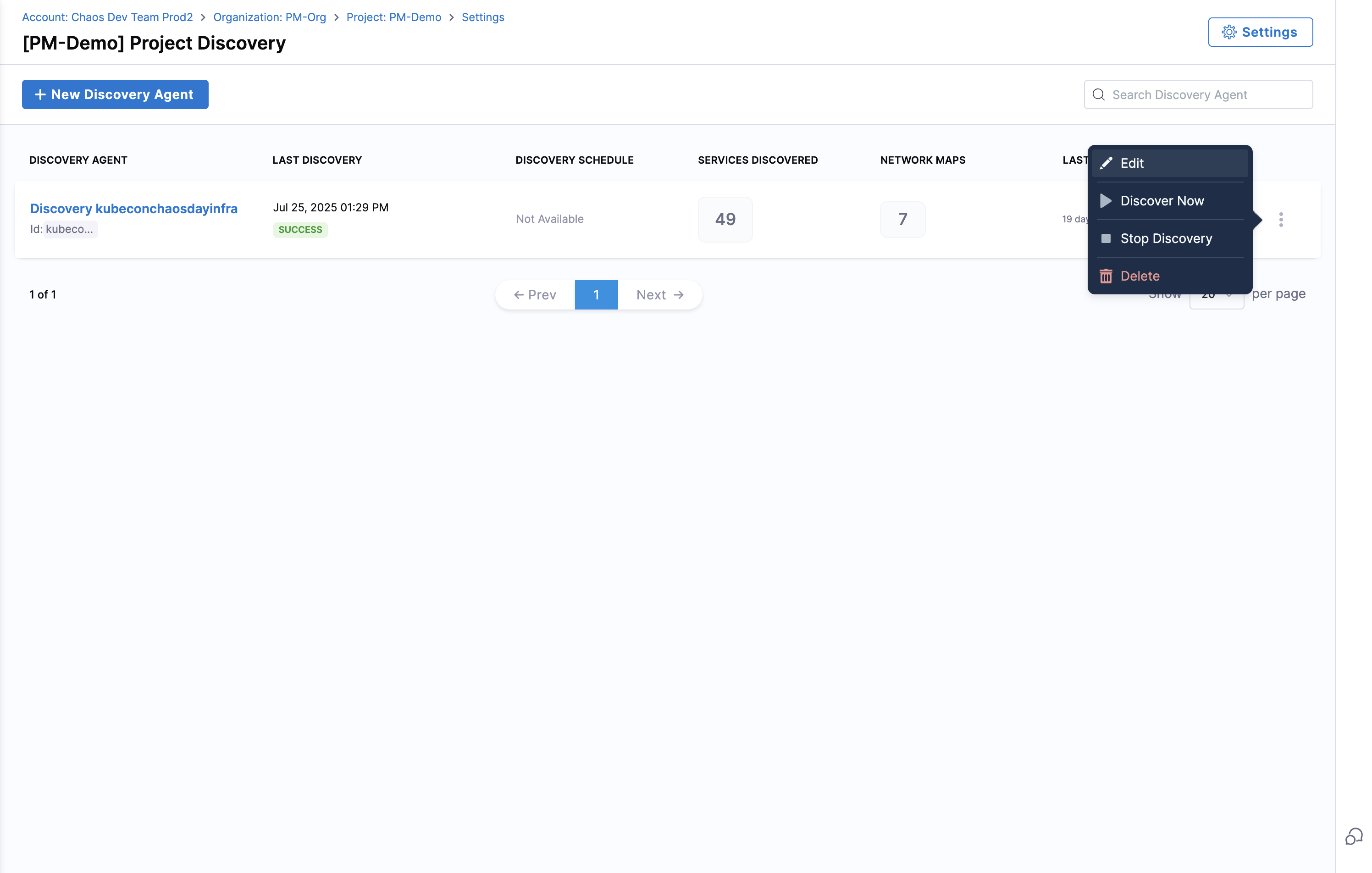

Navigate to Project Settings → Discovery

-

Click the options menu (⋮) next to your discovery agent and select Edit

-

Toggle on "Use static name for configmap and secret" and click Update Discovery Agent

Step 3: Start Running Chaos Experiments

Your GKE Autopilot cluster is now ready for chaos engineering. To create and run your first experiment, follow the Create Experiments guide and choose from any of the supported experiments listed below.

Supported Chaos Experiments

Harness Chaos Engineering provides comprehensive Kubernetes fault coverage. On GKE Autopilot, experiments are categorized based on compatibility with Autopilot's security model.

Supported Pod-Level Experiments

These experiments work seamlessly on GKE Autopilot as they operate within container boundaries:

Container Resource Stress

- Pod CPU Hog: Consumes excess CPU resources of application containers

- Pod CPU Hog Exec: Alternative CPU stress implementation using exec

- Pod Memory Hog: Consumes memory resources causing significant memory usage spikes

- Pod Memory Hog Exec: Alternative memory stress implementation using exec

- Pod IO Stress: Causes I/O stress by spiking input/output requests

Container Storage Operations

- Disk Fill: Fills the pod's ephemeral storage

- FS Fill: Applies filesystem stress by filling pod's ephemeral storage

Container Lifecycle Management

- Container Kill: Causes container failure on specific or random replicas

- Pod Delete: Causes specific or random replicas to fail forcibly or gracefully

- Pod Autoscaler: Tests whether nodes can accommodate multiple replicas

Network Chaos (Container-Level)

- Pod Network Latency: Introduces network delays using traffic control

- Pod Network Loss: Causes packet loss using netem rules

- Pod Network Corruption: Injects corrupted packets into containers

- Pod Network Duplication: Duplicates network packets to disrupt connectivity

- Pod Network Partition: Blocks 100% ingress/egress traffic using network policies

- Pod Network Rate Limit: Limits network bandwidth using Token Bucket Filter

DNS Manipulation

- Pod DNS Error: Injects chaos to disrupt DNS resolution in pods

- Pod DNS Spoof: Mimics DNS resolution to redirect traffic

HTTP/API Fault Injection

- Pod HTTP Latency: Injects HTTP response latency via proxy server

- Pod HTTP Modify Body: Modifies HTTP request/response body content

- Pod HTTP Modify Header: Overrides HTTP header values

- Pod HTTP Reset Peer: Stops outgoing HTTP requests by resetting TCP connections

- Pod HTTP Status Code: Modifies HTTP response status codes

API Gateway/Service Mesh Faults

- Pod API Block: Blocks API requests through path filtering

- Pod API Latency: Injects API request/response latency via proxy

- Pod API Modify Body: Modifies API request/response body using regex

- Pod API Modify Header: Overrides API header values

- Pod API Status Code: Changes API response status codes with path filtering

- Pod API Modify Response Custom: Comprehensive API response modification

File System I/O Manipulation

- Pod IO Attribute Override: Modifies properties of files in mounted volumes

- Pod IO Error: Returns errors on system calls for mounted volume files

- Pod IO Latency: Delays system calls for files in mounted volumes

- Pod IO Mistake: Causes incorrect read/write values in mounted volumes

JVM-Specific Chaos (Java Applications)

- Pod JVM CPU Stress: Consumes excessive CPU threads in Java applications

- Pod JVM Method Exception: Invokes exceptions in Java method calls

- Pod JVM Method Latency: Introduces delays in Java method execution

- Pod JVM Modify Return: Modifies return values of Java methods

- Pod JVM Trigger GC: Forces garbage collection in Java applications

Database Integration Chaos

- Pod JVM SQL Exception: Injects exceptions in SQL queries (Java apps)

- Pod JVM SQL Latency: Introduces latency in SQL queries (Java apps)

- Pod JVM Mongo Exception: Injects exceptions in MongoDB calls (Java apps)

- Pod JVM Mongo Latency: Introduces latency in MongoDB calls (Java apps)

- Pod JVM Solace Exception: Injects exceptions in Solace queries (Java apps)

- Pod JVM Solace Latency: Introduces latency in Solace queries (Java apps)

Cache and Data Store Chaos

- Redis Cache Expire: Expires Redis keys for specified duration

- Redis Cache Limit: Limits memory used by Redis cache

- Redis Cache Penetration: Sends continuous requests for non-existent keys

System Time Manipulation

- Time Chaos: Introduces controlled time offsets to disrupt system time

Node-Level Chaos Experiments

Harness Chaos now supports select node-level chaos experiments on GKE Autopilot that operate within the security constraints of the managed environment:

Node Network Chaos

- Node Network Loss: Injects network packet loss at the node level

- Node Network Latency: Introduces network latency for node-level traffic

Node Service Management

- Kubelet Service Kill: Stops the kubelet service on target nodes

- Node Restart: Performs controlled restart of Kubernetes nodes

Next Steps

Now that you have Harness Chaos Engineering set up on your GKE Autopilot cluster:

-

Create Your First Experiment: Start with a simple Pod CPU Hog experiment with low intensity to test your setup

-

Set Up Application Discovery: Enable Service Discovery in your infrastructure settings and explore Application Maps to visualize your services

-

Add Monitoring: Configure probes to validate your application's resilience during experiments

-

Explore More Experiments: Try network chaos like Pod Network Latency or JVM faults for Java applications